AI Liability Exposure

Artificial intelligence (AI) has moved from experimentation to operational reality.

Across organisations, AI systems now influence decisions, processes, and outcomes with financial, legal, and reputational consequences.

In audits, incidents, supervisory inquiries, or litigation, AI governance is not assessed by intent, principles, or frameworks, but by attribution, evidence, and decision defensibility.

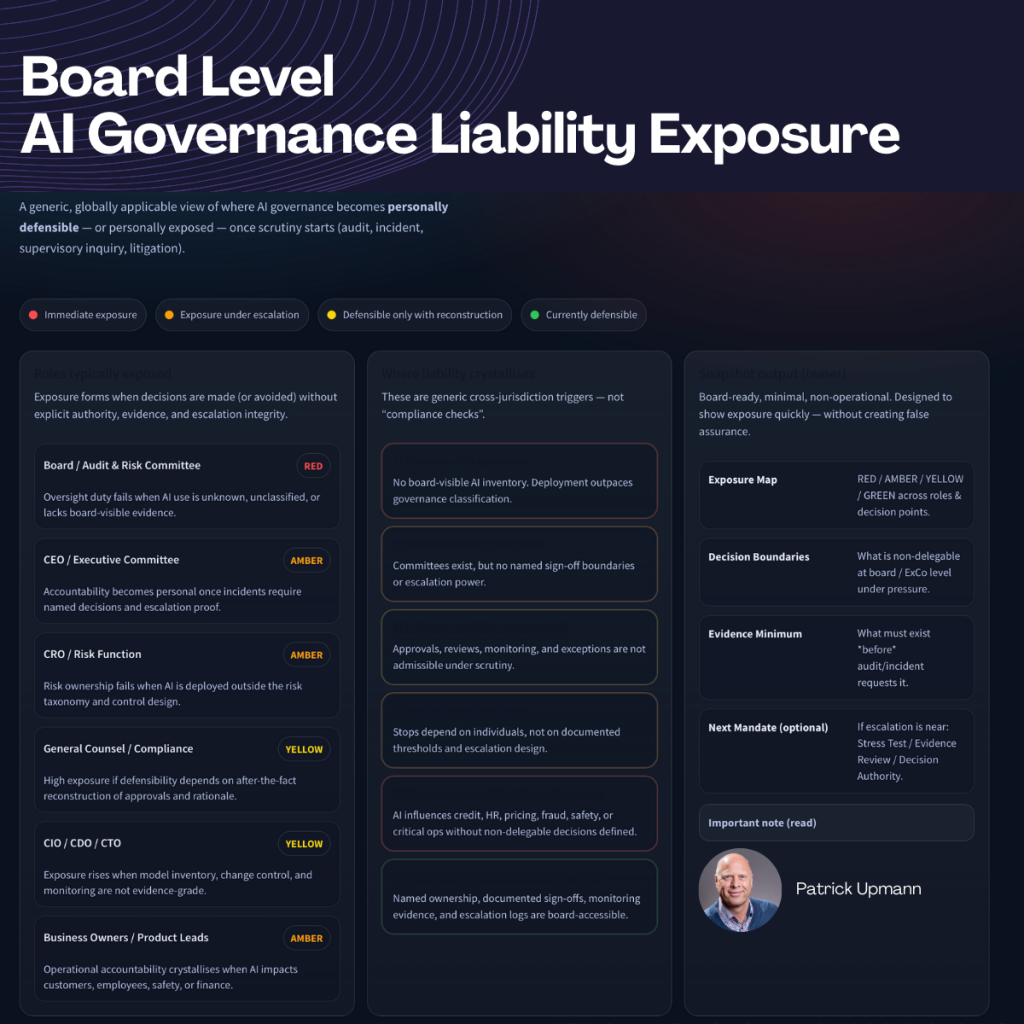

This page provides a board-level exposure perspective.

It shows where AI governance can be defended — and where responsibility becomes personal.

Legend

GREEN — Currently defensible

RED — Immediate personal exposure

AMBER — Exposure under escalation

YELLOW — Defensible only with reconstruction

Exposure Perspective

AI liability does not begin with regulation.

It begins when AI influences decisions, processes, or outcomes.

Exposure typically arises where:

- decision authority is implicit rather than explicitly assigned

- accountability is diffused across roles and committees

- evidence would need to be reconstructed after the fact

- escalation paths collapse under pressure

- high-stakes AI use exists without non-delegable board boundaries

Governance does not fail gradually.

It fails when responsibility becomes personal.

Purpose and Scope

What this is

- A board-level exposure lens

- A governance reality check under escalation assumptions

- A view of accountability, evidence, and decision authority

What this is not

- A compliance assessment

- A maturity model

- A certification

- Operational consulting

- Legal advice

This perspective is intentionally minimal and non-operational.

Decision Authority

Patrick Upmann operates at the point where AI governance can no longer be delegated, postponed, or abstracted into frameworks.

As architect of the AIGN OS and author of scientific governance research, he began with system design and moved into decision authority when boards repeatedly faced accountability gaps under audit, incidents, and liability pressure.

He is engaged when AI governance decisions must be taken, documented, and defended, not merely advised on.

Board Engagement

If this exposure view raises questions you cannot delegate, a board-level engagement can be initiated.

Request a Board-Level Engagement

Confidential · Time-boxed · Board-level only

Disclaimer

This page provides a generic, globally applicable governance exposure perspective.

It does not constitute legal advice, a compliance assessment, or certification.